Overcoming common data quality issues in business intelligence processes is crucial for accurate decision-making. Dirty data leads to flawed analyses, missed opportunities, and ultimately, financial losses. This article dives deep into identifying common data problems like inconsistent formats, missing values, and duplicates, offering practical solutions and strategies for data cleansing, preparation, and governance. We’ll explore effective techniques, powerful tools, and measurable improvements, ensuring your BI processes are fueled by clean, reliable data.

From understanding the impact of inconsistent data on reporting accuracy to implementing robust data governance strategies, we’ll cover the entire spectrum of data quality management. We’ll also examine how data quality tools and technologies can help you monitor data health in real-time, providing valuable insights into your data’s integrity. Finally, we’ll explore case studies illustrating both the pitfalls of poor data quality and the rewards of a well-managed data environment.

Identifying Common Data Quality Issues

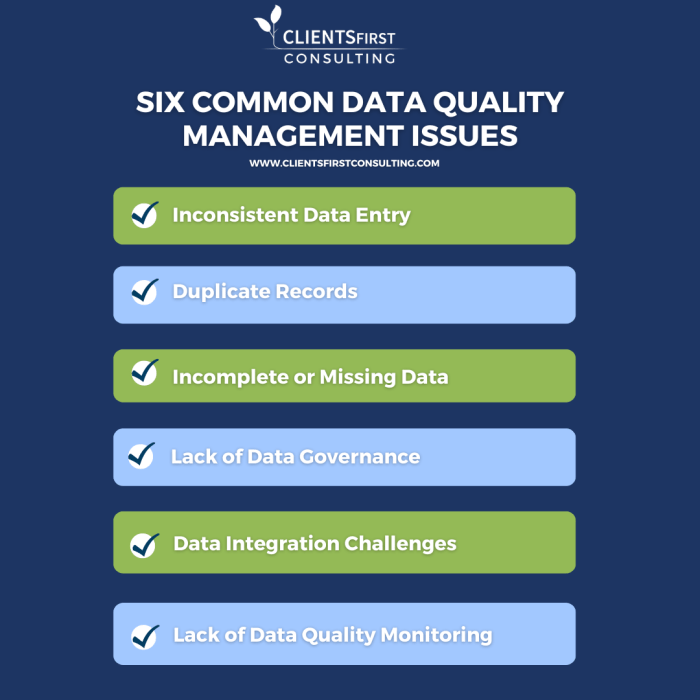

Data quality is the backbone of any successful business intelligence (BI) strategy. Poor data quality leads to inaccurate reports, flawed analyses, and ultimately, poor decision-making. Understanding and addressing common data quality issues is crucial for leveraging the full potential of your BI initiatives. Let’s dive into the most prevalent problems.

Common Data Quality Issues in Business Intelligence

Data quality problems can significantly impact the reliability and usefulness of BI reports. The following table categorizes ten frequent issues. Addressing these proactively ensures your BI system delivers accurate and actionable insights.

| Issue | Description |
|---|---|
| Inconsistent Data Formats | Dates, numbers, and text fields may be entered differently (e.g., MM/DD/YYYY vs. DD/MM/YYYY). |
| Missing Values | Incomplete data entries lead to gaps in analysis and unreliable results. |
| Duplicate Data | Identical records create inflated counts and skew statistical analyses. |
| Data Entry Errors | Typos, incorrect values, and omissions compromise data accuracy. |
| Invalid Data | Data that doesn’t conform to defined rules or data types (e.g., text in a numerical field). |
| Outdated Data | Using stale information leads to decisions based on outdated realities. |
| Inaccurate Data | Incorrect values entered intentionally or unintentionally. |
| Non-Uniform Data | Data collected using different methods or standards creates inconsistencies. |
| Lack of Data Integrity | Relationships between data points are not correctly maintained. |
| Data Silos | Data scattered across various systems makes it difficult to obtain a unified view. |

Impact of Inconsistent Data Formats on BI Reporting Accuracy

Inconsistent data formats are a major source of errors in BI reporting. Imagine a sales report where dates are entered in different formats: a value such as 03/04/2024 means March 4 under MM/DD/YYYY but April 3 under DD/MM/YYYY, so sales can be attributed to the wrong day or month and period totals become inaccurate. Similarly, inconsistent number formats (e.g., using commas or periods as decimal separators) can cause calculation errors and distort financial analysis.

Standardizing data formats is paramount for reliable BI reporting. This often involves data cleansing and transformation processes before data is loaded into the BI system. A company analyzing customer demographics might find inconsistencies in age data, with some entries using years and others using age ranges. This can skew age-based marketing segmentations and campaign effectiveness analyses.
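One common remedy for the number-format problem is to record which decimal separator each source system uses and parse amounts accordingly. The Python sketch below assumes every source consistently uses either `.` or `,` as its decimal separator, with the other character serving only as a thousands separator; the source names in `SOURCE_DECIMAL_SEP` are hypothetical.

```python
# Hypothetical per-source configuration: the decimal separator each system emits.
SOURCE_DECIMAL_SEP = {"erp_us": ".", "erp_de": ","}


def parse_amount(text: str, decimal_sep: str) -> float:
    """Convert a formatted amount such as '1,234.56' or '1.234,56' to a float.

    Assumes the source uses `decimal_sep` for decimals and the other
    character ('.' or ',') only as a thousands separator.
    """
    if decimal_sep not in (".", ","):
        raise ValueError("decimal_sep must be '.' or ','")
    thousands_sep = "," if decimal_sep == "." else "."
    # Drop whitespace and thousands separators, then normalize the decimal point.
    cleaned = "".join(text.split()).replace(thousands_sep, "")
    return float(cleaned.replace(decimal_sep, "."))


us_value = parse_amount("1,234.56", SOURCE_DECIMAL_SEP["erp_us"])
de_value = parse_amount("1.234,56", SOURCE_DECIMAL_SEP["erp_de"])
# Parsing a US-formatted string with the German rule silently yields a wrong value.
misread = parse_amount("1,234.56", SOURCE_DECIMAL_SEP["erp_de"])
print(us_value, de_value, misread)
```

The last call shows why the separator should come from metadata about the source rather than be guessed per value: the string parses without any error, yet the result is off by a factor of 1,000.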

Consequences of Missing Values in Datasets Used for Business Intelligence

Missing values significantly hamper the accuracy and reliability of BI analyses. They can lead to biased results, inaccurate predictions, and ultimately, poor decision-making. For instance, if a significant portion of customer survey responses are missing, the conclusions drawn from the survey might not be representative of the entire customer base. Techniques like imputation (estimating missing values) can be employed, but they introduce uncertainty and can affect the overall analysis.

The choice of imputation method should be carefully considered, as different methods can yield different results. For example, a retail company analyzing customer purchase data might encounter missing values for certain product categories. This can result in an underestimation of the popularity of those products and influence inventory management decisions.
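To spot category-specific gaps like these, it helps to compute the missing-value rate per group rather than only for the dataset as a whole. A minimal Python sketch, assuming records are dictionaries and that a missing value is either an explicit `None` or an absent key; the field names and figures are hypothetical.

```python
from collections import defaultdict


def missing_rate_by_group(records, group_field, value_field):
    """Return {group: share of records in that group whose value_field is missing}."""
    totals = defaultdict(int)
    missing = defaultdict(int)
    for record in records:
        group = record.get(group_field)
        totals[group] += 1
        if record.get(value_field) is None:  # absent key or explicit None
            missing[group] += 1
    return {group: missing[group] / totals[group] for group in totals}


sales = [
    {"category": "dairy", "units_sold": 40},
    {"category": "dairy", "units_sold": 35},
    {"category": "snacks", "units_sold": None},
    {"category": "snacks", "units_sold": 18},
]
print(missing_rate_by_group(sales, "category", "units_sold"))
```

A rate that is much higher for one category than for the others suggests the gaps are systematic rather than random, which matters when choosing how to handle them.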

Challenges Posed by Duplicate Data Entries in BI Systems

Duplicate data entries inflate data counts, leading to skewed analyses and inaccurate reporting. For example, duplicate customer records can result in inflated customer counts, inaccurate customer segmentation, and miscalculation of marketing campaign effectiveness. Identifying and removing duplicates is crucial for maintaining data integrity. However, identifying duplicates can be challenging, especially in large datasets with subtle variations in data entries.

Data deduplication techniques are necessary to ensure data accuracy and consistency. A financial institution analyzing transaction data might find duplicate entries due to system errors or data entry mistakes. This can lead to incorrect balance calculations and financial reporting inaccuracies.
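Exact comparisons miss duplicates that differ only in letter case or spacing. The Python sketch below normalizes a chosen set of key fields before comparing and keeps the first occurrence of each record. It assumes records are dictionaries and that the chosen key fields (here a hypothetical `name` and `email`) identify a customer; catching typos would additionally require fuzzy matching with a similarity measure.

```python
def normalize(value) -> str:
    """Lowercase and collapse internal whitespace so 'Ana  Lima ' == 'ana lima'."""
    return " ".join(str(value).lower().split())


def deduplicate(records, key_fields):
    """Split records into (unique, duplicates) using normalized key fields."""
    seen = set()
    unique, duplicates = [], []
    for record in records:
        key = tuple(normalize(record.get(field, "")) for field in key_fields)
        if key in seen:
            duplicates.append(record)
        else:
            seen.add(key)
            unique.append(record)
    return unique, duplicates


customers = [
    {"name": "Ana Lima", "email": "ana@example.com"},
    {"name": "ana  lima", "email": "ANA@example.com "},
    {"name": "Bo Chen", "email": "bo@example.com"},
]
unique, duplicates = deduplicate(customers, ["name", "email"])
print(len(unique), len(duplicates))
```

Returning the duplicates as a separate list, rather than discarding them silently, leaves an audit trail that someone can review before records are merged.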

Data Cleansing and Preparation Techniques

Data cleansing and preparation are crucial steps in any successful business intelligence process. Dirty data leads to inaccurate insights and flawed decision-making, ultimately hindering your organization’s growth. By effectively cleaning and preparing your data, you lay the foundation for robust and reliable analytics. This section explores several techniques to achieve this.

Effective Data Cleansing Techniques

Addressing common data quality problems requires a multi-pronged approach. The following five techniques are essential for ensuring your data is ready for analysis.

- Handling Missing Values: Missing data is a pervasive issue. Common remedies are imputation (replacing missing values with estimated ones) and deletion (removing rows or columns with missing data); the right choice depends on the extent and nature of the missingness. For example, if a small percentage of values are missing at random in a specific column, imputation using the mean or median might be appropriate. If a column is mostly empty, dropping it may be the better strategy. If the missingness follows a pattern, however, deleting the affected rows can bias the results, and model-based methods such as multiple imputation are usually safer.

- Detecting and Correcting Outliers: Outliers, or data points significantly different from the rest, can skew results. Identifying outliers using methods like box plots or z-scores allows for investigation. They may be errors requiring correction, or genuine extreme values that should be kept and handled deliberately, for example by analyzing them separately. For instance, a customer’s age listed as 200 is clearly an outlier and needs correction.

- Data Deduplication: Duplicate records inflate data size and lead to skewed analysis. Deduplication involves identifying and removing duplicates, either by comparing key fields or using more sophisticated techniques like fuzzy matching for records with slight variations. Consider a customer database; deduplication ensures each customer is represented only once.

- Data Transformation: This involves changing the format or structure of data to improve its usability. For example, converting categorical data into numerical data using techniques like one-hot encoding facilitates certain types of analysis. Similarly, scaling or normalizing numerical data can improve the performance of algorithms.

- Consistency Checks: This involves verifying the consistency of data across different fields and sources. For example, checking for inconsistencies between a customer’s address in two different databases, or ensuring dates are formatted uniformly. These checks help identify and correct errors introduced during data entry or transfer.
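For the outlier step, a box plot flags points lying more than 1.5 times the interquartile range (IQR) beyond the first or third quartile. The Python sketch below applies that rule using the standard library's `statistics.quantiles` (Python 3.8+); the sample ages are made up. In small samples the IQR rule is often more practical than a z-score cutoff of 3, because with ten or fewer values no point can reach |z| > 3, however extreme it is.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (the box-plot whisker rule)."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


ages = [23, 35, 41, 29, 52, 38, 200, 45]
print(iqr_outliers(ages))
```

Flagged values are best routed to review rather than deleted automatically, since some of them will be genuine extremes.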

Data Standardization

Data standardization ensures consistency in data format and structure. This involves converting data into a common format, such as using a standard date format (YYYY-MM-DD) or a consistent currency symbol. Standardization improves data integration, reduces ambiguity, and facilitates accurate analysis. For example, standardizing address formats across different databases ensures accurate geocoding and improved reporting on customer location.
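A sketch of date standardization in Python, converting each source's dates to the YYYY-MM-DD (ISO 8601) format. It assumes each source system uses one known format; the source names and formats in `SOURCE_DATE_FORMATS` are hypothetical. Declaring the format per source matters because many values are valid under both MM/DD/YYYY and DD/MM/YYYY, so the value alone cannot tell you which was meant.

```python
from datetime import datetime

# Hypothetical mapping from source system to the date format it emits.
SOURCE_DATE_FORMATS = {
    "crm_us": "%m/%d/%Y",
    "erp_eu": "%d/%m/%Y",
    "webshop": "%Y-%m-%d",
}


def to_iso_date(raw: str, source: str) -> str:
    """Parse `raw` with the source's declared format and return YYYY-MM-DD.

    Raises KeyError for an unknown source and ValueError for a value that
    does not match the declared format, so bad rows surface instead of
    being silently misread.
    """
    fmt = SOURCE_DATE_FORMATS[source]
    return datetime.strptime(raw.strip(), fmt).date().isoformat()


print(to_iso_date("03/04/2024", "crm_us"))  # March 4
print(to_iso_date("03/04/2024", "erp_eu"))  # April 3
print(to_iso_date("2024-04-03", "webshop"))
```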

Handling Missing Data Using Imputation

Handling missing data effectively requires a systematic approach. The following steps outline a process using imputation methods:

- Identify Missing Data: Determine the extent and pattern of missing data using visualizations and summary statistics.

- Choose an Imputation Method: Select an appropriate imputation technique based on the nature of the data and the pattern of missingness. Methods include mean/median imputation, k-Nearest Neighbors (k-NN) imputation, and multiple imputation.

- Impute Missing Values: Apply the chosen method to replace missing values with estimated values.

- Validate Imputation: Assess the impact of imputation on the data distribution and the overall analysis. Compare results with and without imputation to ensure the imputed values don’t unduly influence the results.
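The impute and validate steps can be sketched in Python with median imputation, one of the methods listed above. The sketch also records which values were imputed, so downstream reports can exclude or flag them, and compares the spread before and after imputation, because filling gaps with a single value shrinks the variance. The input data is invented for illustration.

```python
import statistics


def impute_median(values):
    """Replace None with the median of the observed values.

    Returns (imputed_values, was_imputed_flags).
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: no observed values")
    fill = statistics.median(observed)
    imputed = [fill if v is None else v for v in values]
    flags = [v is None for v in values]
    return imputed, flags


order_values = [10, None, 14, 12, None, 30]
imputed, flags = impute_median(order_values)

# Validation: compare the spread with and without the imputed values.
observed = [v for v in order_values if v is not None]
print(imputed, flags)
print(statistics.stdev(observed), statistics.stdev(imputed))
```

The smaller standard deviation after imputation is a reminder to compare results with the unimputed analysis before trusting them.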

Data Validation Techniques

Various data validation techniques ensure data accuracy and reliability. These techniques can be broadly categorized into:

- Range Checks: Verify that values fall within a predefined range. For example, ensuring that age values are within a plausible range (0-120).

- Format Checks: Ensure data conforms to a specific format, such as date or phone number formats.

- Cross-field Checks: Verify consistency across different fields. For instance, checking if the calculated total matches the sum of individual items on an invoice.

- Uniqueness Checks: Ensure that values are unique within a specific field. For example, checking for duplicate customer IDs.

- Check Digits: Use a check-digit algorithm, such as the Luhn algorithm used for payment card numbers, to catch mistyped identification numbers.

Different techniques are chosen based on the specific data and the nature of potential errors. For instance, range checks are useful for detecting outliers, while format checks ensure data consistency and ease of processing.
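Each of the five kinds of checks can be expressed as a small predicate function. The Python sketch below is illustrative: the phone-number pattern, the invoice layout (`items`, `qty`, `unit_price`, `total`), and the rounding tolerance are assumptions, while the check-digit function implements the standard Luhn algorithm.

```python
import re

# Assumed phone format for illustration: optional '+', then 7 to 15 digits.
PHONE_PATTERN = re.compile(r"\+?\d{7,15}")


def range_check(value, low, high):
    """Range check: value lies within [low, high]."""
    return low <= value <= high


def format_check(value, pattern=PHONE_PATTERN):
    """Format check: the whole string matches the pattern."""
    return pattern.fullmatch(value) is not None


def cross_field_check(invoice, tolerance=0.005):
    """Cross-field check: invoice total equals the sum of its line items."""
    line_sum = sum(item["qty"] * item["unit_price"] for item in invoice["items"])
    return abs(line_sum - invoice["total"]) <= tolerance


def duplicate_values(values):
    """Uniqueness check: return the set of values that occur more than once."""
    seen, dupes = set(), set()
    for v in values:
        if v in seen:
            dupes.add(v)
        seen.add(v)
    return dupes


def luhn_valid(number):
    """Check-digit validation with the Luhn algorithm (spaces allowed)."""
    if not re.fullmatch(r"[0-9 ]+", number):
        return False
    digits = [int(c) for c in number if c != " "]
    if not digits:
        return False
    total = 0
    # Double every second digit from the right; subtract 9 if the result exceeds 9.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


invoice = {
    "items": [{"qty": 2, "unit_price": 19.99}, {"qty": 1, "unit_price": 5.00}],
    "total": 44.98,
}
print(range_check(200, 0, 120), format_check("+4915123456789"), cross_field_check(invoice))
print(duplicate_values(["C001", "C002", "C001"]), luhn_valid("79927398713"))
```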

Implementing Data Governance Strategies

Data governance is the cornerstone of any successful business intelligence (BI) strategy. Without a robust framework for managing data quality, your insights will be unreliable, leading to flawed decision-making and ultimately hindering business growth. Implementing effective data governance isn’t just about fixing problems; it’s about proactively preventing them and building a culture of data accountability. Data governance strategies provide a structured approach to defining, enforcing, and improving data quality across an organization.

This involves establishing clear roles, responsibilities, and processes for data management, ensuring that data is accurate, consistent, and readily available for analysis. A well-defined data governance framework acts as a preventative measure, reducing the need for extensive data cleansing efforts down the line. This translates to cost savings, increased efficiency, and more reliable BI outcomes.

Creating a Data Governance Framework

A comprehensive data governance framework typically begins with defining data quality dimensions. These dimensions, such as accuracy, completeness, consistency, timeliness, and validity, provide a clear standard against which data quality can be measured. The framework then outlines processes for data discovery, profiling, and monitoring, ensuring that data meets these defined quality standards. This might involve creating data dictionaries, defining data ownership, and establishing clear escalation paths for data quality issues.

For instance, a company might establish a data quality council comprising representatives from various departments to oversee the implementation and enforcement of data governance policies.

Defining and Enforcing Data Quality Rules

Data quality rules are specific criteria used to assess whether data meets the defined quality dimensions. These rules can be implemented using various tools and techniques, ranging from simple data validation checks within databases to sophisticated rule engines integrated with BI platforms. For example, a rule might stipulate that all customer addresses must contain a valid postal code, or that all sales transactions must have a corresponding invoice number.

Enforcement involves automating checks to identify data that violates these rules and triggering alerts or workflows to address the discrepancies. This proactive approach helps ensure that only high-quality data enters the BI system. Regular audits and reviews of these rules are crucial to ensure their continued effectiveness and relevance.
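Rules like the two examples above can be kept as data (a name plus a predicate) and evaluated automatically, which makes them easy to review and extend. A minimal Python sketch, assuming a hypothetical record shape with `postal_code` and `invoice_number` fields and US ZIP codes as the postal-code standard. The `alert` function is a placeholder: in practice it would hand violations to a ticketing or notification workflow instead of printing them.

```python
import re
from typing import Callable

# Assumption: US ZIP or ZIP+4 codes; other countries need other patterns.
ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")

# Each rule is (rule_name, predicate); the predicate returns True when a record passes.
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("valid_postal_code",
     lambda r: ZIP_PATTERN.fullmatch(str(r.get("postal_code") or "")) is not None),
    ("has_invoice_number",
     lambda r: bool(str(r.get("invoice_number") or "").strip())),
]


def evaluate(records, rules=RULES):
    """Return a list of (record_index, rule_name) for every failed rule."""
    violations = []
    for index, record in enumerate(records):
        for name, passes in rules:
            if not passes(record):
                violations.append((index, name))
    return violations


def alert(violations):
    """Placeholder enforcement hook: print each violation."""
    for index, name in violations:
        print(f"record {index} failed rule '{name}'")


batch = [
    {"postal_code": "94105", "invoice_number": "INV-1001"},
    {"postal_code": "9410", "invoice_number": ""},
    {"postal_code": "94105-1234"},
]
alert(evaluate(batch))
```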

Best Practices for Data Governance in Business Intelligence

The following table outlines key best practices for establishing effective data governance within a BI environment. Adhering to these practices significantly improves data quality and maximizes the value derived from BI initiatives.

| Practice | Description | Benefits | Example |
|---|---|---|---|
| Establish clear data ownership | Assign responsibility for data accuracy and quality to specific individuals or teams. | Improved accountability and faster resolution of data issues. | Assigning a data steward for each key data asset (e.g., customer data, sales data). |
| Implement data quality monitoring tools | Use software to automatically track and report on data quality metrics. | Proactive identification and resolution of data quality problems. | Using a data quality monitoring tool to flag records with missing or inconsistent values. |
| Develop and enforce data quality rules | Define specific rules for data accuracy, completeness, and consistency, and enforce them through automated checks. | Improved data accuracy and consistency. | Implementing a rule that prevents the entry of duplicate customer records. |
| Regularly review and update data governance policies | Ensure that policies remain relevant and effective over time. | Adaptability to changing business needs and data sources. | Conducting an annual review of data governance policies and making necessary adjustments. |

Stakeholder Roles and Responsibilities

Effective data governance requires the collaboration of various stakeholders, each with specific roles and responsibilities. These roles often overlap, but clear definitions ensure accountability and efficient data management. For instance, Data Stewards are responsible for the quality of specific data assets, while Data Owners have ultimate responsibility for the data within their domain. The Data Governance Council provides overall oversight and guidance, ensuring alignment with business objectives.

IT plays a crucial role in implementing and maintaining the technical infrastructure supporting data governance initiatives. Finally, Business Users are essential for providing feedback and ensuring that data meets their needs. Clear communication and collaboration among these stakeholders are paramount to success.

Utilizing Data Quality Tools and Technologies

Specialized software is crucial for effectively managing and improving data quality. These tools automate many tedious tasks, provide insightful visualizations, and streamline the entire data quality process, leading to better business decisions. Choosing the right tools depends on your specific needs and existing infrastructure, but several categories of software stand out as essential.

Data profiling and monitoring tools are indispensable for understanding the current state of your data and identifying potential problems.

These tools analyze data sets, identifying inconsistencies, missing values, and other anomalies. This allows for proactive intervention and prevents these issues from propagating throughout your BI processes.

Data Profiling and Monitoring Tools

Several software solutions excel at data profiling and monitoring. For example, Informatica offers comprehensive data quality capabilities, including profiling, cleansing, and monitoring, through Informatica Data Quality, which complements its PowerCenter data integration platform. Similarly, Talend Open Studio provides a range of tools for data integration and quality management, with a strong emphasis on open-source components. These platforms typically feature automated routines for identifying data anomalies, such as outliers or inconsistencies in data types.

They also often provide detailed reports and visualizations to aid in understanding the identified issues and their impact. Another popular choice is Collibra, best known for its data governance capabilities, which also offers data quality monitoring features. These tools help organizations maintain a comprehensive view of data health and pinpoint areas needing attention.

Data Quality Dashboards: Real-Time Insights

Data quality dashboards offer a centralized view of data health, providing real-time insights into key metrics. Imagine a dashboard displaying the percentage of complete records, the number of duplicate entries, or the prevalence of invalid data values. This visual representation allows stakeholders to quickly assess the overall quality of their data and identify potential problems. Effective dashboards often use color-coding and other visual cues to highlight areas needing immediate attention.

For example, a red indicator might signify a critical data quality issue requiring immediate action, while a green indicator would suggest that a specific data set is healthy. This intuitive presentation of data enables faster decision-making and proactive problem-solving. Moreover, dashboards can track key metrics over time, providing valuable insights into trends and the effectiveness of implemented data quality initiatives.
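The color-coding behind such a dashboard usually comes down to a simple threshold mapping. The Python sketch below turns metric values into red, amber, or green statuses; the metric names, values, and thresholds are invented for illustration, and a real dashboard would read them from a monitoring store rather than a hard-coded list.

```python
def rag_status(value, green_at, amber_at, higher_is_better=True):
    """Map a metric to GREEN/AMBER/RED given two thresholds.

    For higher-is-better metrics: GREEN if value >= green_at, AMBER if
    value >= amber_at, otherwise RED. Lower-is-better metrics are handled
    by negating the value and both thresholds.
    """
    if not higher_is_better:
        value, green_at, amber_at = -value, -green_at, -amber_at
    if value >= green_at:
        return "GREEN"
    if value >= amber_at:
        return "AMBER"
    return "RED"


# (metric, current value, green threshold, amber threshold, higher_is_better)
metrics = [
    ("complete records (%)", 97.2, 99.0, 95.0, True),
    ("duplicate entries", 12, 0, 10, False),
    ("invalid values (%)", 0.4, 0.5, 2.0, False),
]
for name, value, green, amber, higher in metrics:
    print(f"{name:<22} {value:>6}  {rag_status(value, green, amber, higher)}")
```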

Integrating Data Quality Tools with BI Platforms

Integrating data quality tools directly into your existing BI platform offers significant advantages. This integration streamlines workflows, ensuring that data quality checks are performed automatically as part of the data processing pipeline. It eliminates the need for manual data transfers and reduces the risk of errors. For instance, integrating a data quality tool with a BI platform like Tableau or Power BI allows for automated data cleansing and validation before data is loaded into the BI environment.

This ensures that the data used for reporting and analysis is accurate and reliable, leading to more trustworthy insights. This integrated approach promotes a more efficient and effective data management process, reducing the time and resources needed to maintain data quality.
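Running checks automatically before data reaches the BI platform can be sketched as a quality gate: valid rows pass through, failing rows are quarantined for review, and the load is aborted if too many rows fail. The Python sketch below is tool-agnostic; the `amount_is_valid` rule and the 2% threshold are assumptions, and the passed rows would be handed to whatever loader feeds Tableau or Power BI in your pipeline.

```python
class QualityGateError(Exception):
    """Raised when a batch fails too many checks to be loaded."""


def quality_gate(rows, is_valid, max_reject_rate=0.02):
    """Split rows into (passed, quarantined); abort if the reject rate is too high."""
    passed, quarantined = [], []
    for row in rows:
        (passed if is_valid(row) else quarantined).append(row)
    reject_rate = len(quarantined) / len(rows) if rows else 0.0
    if reject_rate > max_reject_rate:
        raise QualityGateError(
            f"{reject_rate:.1%} of rows failed validation "
            f"(limit {max_reject_rate:.1%}); load aborted"
        )
    return passed, quarantined


def amount_is_valid(row):
    """Hypothetical rule: amount must be a non-negative number."""
    amount = row.get("amount")
    return isinstance(amount, (int, float)) and amount >= 0


batch = [{"amount": 10.0}] * 99 + [{"amount": -5.0}]
passed, quarantined = quality_gate(batch, amount_is_valid)
print(len(passed), len(quarantined))
```

Quarantining rather than dropping failing rows keeps them available for root-cause analysis, while the abort threshold stops a badly broken batch from reaching reports at all.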

Features of a Robust Data Quality Management System

A robust data quality management system possesses several key features. It should include automated data profiling capabilities to quickly assess data quality. Comprehensive data cleansing and transformation functionalities are essential to address identified issues. Real-time data monitoring is critical for proactive identification of problems. Furthermore, a strong data governance framework ensures consistent data quality across the organization.

Finally, reporting and visualization capabilities are necessary to track progress and communicate findings effectively. A system incorporating all these elements ensures data quality remains a top priority, driving more reliable and trustworthy business intelligence.

Measuring and Improving Data Quality

Data quality is not a destination, but a continuous journey. Regularly measuring and improving your data quality is crucial for ensuring your business intelligence initiatives deliver accurate, reliable insights that drive informed decision-making. Ignoring data quality issues can lead to flawed analyses, missed opportunities, and ultimately, poor business outcomes. This section outlines a practical approach to measuring, tracking, and improving data quality within your organization.

A robust data quality measurement system requires a multi-faceted approach that covers several aspects of data health. Assessing one or two metrics is not enough; a comprehensive strategy is needed to gain a holistic understanding of your data’s overall quality.

Data Quality Measurement Methodology

Establishing a standardized method for measuring data quality is the cornerstone of any successful improvement program. This involves defining key data quality dimensions relevant to your business needs, selecting appropriate metrics for each dimension, and establishing thresholds to define acceptable levels of quality. For example, you might focus on dimensions like accuracy, completeness, consistency, timeliness, uniqueness, and validity. Each dimension would then have associated metrics, such as the percentage of missing values (completeness), the number of duplicate records (uniqueness), or the percentage of records with incorrect data types (validity).

Setting thresholds helps determine when corrective action is needed. For instance, a completeness threshold of 98% might be acceptable for customer address data, but 99.9% might be required for financial transaction data.
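A worked example of per-dataset thresholds, in Python: completeness is measured as the share of records with a non-empty value in a field and compared against the threshold for that data set. The dataset names, field names, and record counts are hypothetical; the thresholds are the 98% and 99.9% figures mentioned above.

```python
# Per-dataset completeness thresholds (98% for addresses, 99.9% for transactions).
COMPLETENESS_THRESHOLDS = {
    "customer_address": 0.98,
    "financial_transactions": 0.999,
}


def is_filled(value):
    """A value counts as present unless it is None or a blank string."""
    return value is not None and not (isinstance(value, str) and not value.strip())


def completeness(records, field):
    """Share of records with a filled `field` (an empty data set scores 0.0)."""
    if not records:
        return 0.0
    return sum(1 for r in records if is_filled(r.get(field))) / len(records)


def check_completeness(dataset, records, field):
    """Return (score, meets_threshold) for the given data set and field."""
    score = completeness(records, field)
    return score, score >= COMPLETENESS_THRESHOLDS[dataset]


addresses = [{"postal_code": "94105"}] * 985 + [{"postal_code": None}] * 15
transactions = [{"amount": 10.0}] * 9985 + [{"amount": None}] * 15

print(check_completeness("customer_address", addresses, "postal_code"))
print(check_completeness("financial_transactions", transactions, "amount"))
```

The same 15 missing values pass the address check (98.5%) but fail the transaction check (99.85%), which is why thresholds are best set per data set rather than globally.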

Tracking Data Quality Improvements Over Time

Tracking key data quality metrics over time allows you to monitor the effectiveness of your improvement initiatives and identify areas needing further attention. This involves establishing a baseline measurement of your current data quality, implementing data quality improvement strategies, and then regularly re-measuring the same metrics to track progress. Visualizing this data through charts and graphs, such as trend lines or control charts, makes it easier to identify patterns and pinpoint areas where improvements are most needed.

For example, if the percentage of invalid email addresses consistently decreases over several months, it indicates the success of your data cleansing efforts. Conversely, a persistent high rate of incomplete customer records might signal a need for improved data entry procedures.
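A control chart can be approximated in a few lines of Python: compute limits as the baseline mean plus or minus three standard deviations, then flag new measurements that fall outside them. This is a simplification (classic individuals charts estimate sigma from the average moving range instead), and the weekly invalid-email rates below are invented.

```python
import statistics


def control_limits(baseline):
    """Return (lower, upper) limits as mean +/- 3 sample standard deviations."""
    center = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    return center - 3 * sigma, center + 3 * sigma


def out_of_control(series, limits):
    """Return indexes of points that fall outside the control limits."""
    lower, upper = limits
    return [i for i, value in enumerate(series) if value < lower or value > upper]


# Weekly % of invalid email addresses before a cleansing initiative (baseline).
baseline = [4.1, 3.9, 4.3, 4.0, 4.2, 3.8]
limits = control_limits(baseline)
# Later weeks: index 2 is an unusual spike, index 3 a drop below the baseline range.
recent = [4.0, 4.4, 5.2, 3.1]
print(limits, out_of_control(recent, limits))
```

A sustained run of points below the lower limit after an initiative such as cleansing suggests a genuine improvement rather than normal fluctuation; the limits would then be recomputed from the new baseline.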

Interpreting Data Quality Reports

Data quality reports should provide a clear and concise overview of the current state of your data, highlighting areas of concern and potential improvement. These reports should be easily understandable by both technical and non-technical stakeholders. Analyzing these reports involves identifying metrics that fall below established thresholds, investigating the root causes of these issues, and prioritizing areas for improvement based on business impact.

For example, a report might reveal a high rate of inaccurate customer phone numbers, leading to difficulties in customer service. Understanding the source of these inaccuracies, whether it’s data entry errors or outdated information, is crucial for developing effective solutions.

Establishing a Continuous Data Quality Improvement Process

A continuous data quality improvement process is essential for maintaining high data quality standards over time. This involves integrating data quality checks into all stages of the data lifecycle, from data collection and entry to data storage and analysis. It also requires establishing a feedback loop where data quality issues are identified, addressed, and monitored for recurrence. This might involve regular data quality audits, automated data validation rules, and a dedicated team responsible for data quality management.

Consider implementing a system of regular data quality reviews – perhaps monthly or quarterly – where key metrics are reviewed, issues are discussed, and improvement plans are developed and tracked. This ongoing process ensures data quality remains a top priority, leading to more reliable and insightful business intelligence.

Case Studies

Concrete examples often paint a clearer picture than theoretical explanations. The following hypothetical case studies show the impact of both poor and excellent data quality management on business intelligence outcomes and highlight the tangible benefits of prioritizing data quality.

Poor Data Quality Leading to Erroneous Business Decisions

Imagine “FreshFoods,” a rapidly growing grocery chain. Their outdated BI system relied on inconsistent data from various sources – some manually entered, others automatically uploaded with minimal validation. This resulted in inaccurate inventory reports. Based on flawed data suggesting high demand for organic kale, FreshFoods overstocked, leading to significant spoilage and financial losses. Simultaneously, a shortage of popular gluten-free pasta went unnoticed due to data entry errors, resulting in lost sales and frustrated customers.

The inaccurate data led to poor forecasting, ineffective inventory management, and ultimately, a decrease in profitability. The company’s marketing campaigns, targeted based on faulty customer segmentation data, also underperformed, further impacting their bottom line. The overall impact was a 15% reduction in quarterly profits and a noticeable decline in customer satisfaction.

Effective Data Quality Management Improving BI Outcomes

In contrast, consider “TechSolutions,” a tech startup. They implemented a robust data governance strategy from the outset. This included data cleansing, validation rules, and regular data quality audits. Before implementing these measures, their customer churn rate was a concerning 20%. After implementing their data quality initiatives, they were able to identify key factors contributing to churn – slow customer support response times and insufficient onboarding assistance.

By addressing these issues, TechSolutions reduced their customer churn rate by 10 percentage points (from 20% to around 10%) within six months. Their improved data quality also allowed for more accurate sales forecasting, resulting in a 5% increase in sales revenue within the same period. The improved accuracy of their marketing campaigns, based on accurate customer segmentation, resulted in a 12% increase in conversion rates.

The overall impact was a significant improvement in profitability, customer retention, and business growth.

Visual Representation of Data Quality Improvement

Imagine two bar charts side by side. The left chart, representing data *before* data quality improvements, shows uneven, jagged bars of varying heights. Many bars are clustered together, making it difficult to discern individual data points. The chart’s title might be “Customer Churn Rate: Before Data Cleansing,” and the y-axis would display a high churn rate (perhaps 20%). The bars themselves would be inconsistently colored and labeled, reflecting the messy, inconsistent nature of the original data.

The right chart, representing data *after* improvements, shows smooth, evenly spaced bars with clearly defined heights. The chart’s title would be “Customer Churn Rate: After Data Cleansing,” and the y-axis would show a significantly lower churn rate (around 10%). The bars are consistently colored and labeled, indicating improved data consistency and accuracy. This side-by-side comparison makes the transformation achieved through effective data quality management immediately visible, highlighting the improved clarity and reliability of the data.